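- SGD: Stochastic Gradient Descent. It updates the parameters by taking small steps against the gradient. It is the most basic optimizer, but when the gradient varies strongly with direction (for example, a function that is steep along one axis and shallow along another), the gradient does not point toward the minimum and the search path zigzags inefficiently. It typically converges much more slowly than the optimizers that came after it.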

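- Momentum: adds a velocity variable to SGD, so updates build up speed in directions where the gradient is consistent and the search accelerates.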

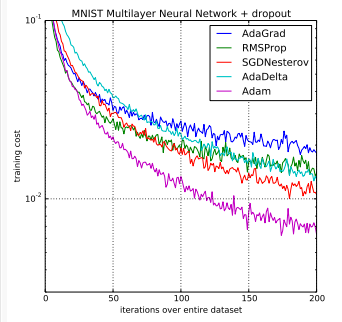
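The SGD and Momentum update rules above can be sketched in plain Python as follows. This is a minimal illustration, not the code behind the linked visualizations: the test function f(x, y) = x²/20 + y², the start point (-7, 2), and the hyperparameters (`lr`, `momentum`) are assumptions chosen to produce an elongated valley in which plain SGD moves slowly along the shallow x direction.

```python
# Hypothetical test function (an assumption, not from the source):
# f(x, y) = x**2 / 20 + y**2, an elongated bowl with its minimum at (0, 0).
def grad(params):
    x, y = params
    return [x / 10.0, 2.0 * y]


class SGD:
    """Plain SGD: params <- params - lr * grad."""

    def __init__(self, lr=0.01):
        self.lr = lr

    def update(self, params, grads):
        for i in range(len(params)):
            params[i] -= self.lr * grads[i]


class Momentum:
    """SGD plus a velocity term: v <- momentum * v - lr * grad; params <- params + v."""

    def __init__(self, lr=0.01, momentum=0.9):
        self.lr = lr
        self.momentum = momentum
        self.v = None  # velocity, created lazily to match the parameter count

    def update(self, params, grads):
        if self.v is None:
            self.v = [0.0] * len(params)
        for i in range(len(params)):
            self.v[i] = self.momentum * self.v[i] - self.lr * grads[i]
            params[i] += self.v[i]


def run(optimizer, start=(-7.0, 2.0), steps=100):
    """Apply `steps` updates from `start` and return the final parameters."""
    params = list(start)
    for _ in range(steps):
        optimizer.update(params, grad(params))
    return params


sgd_end = run(SGD(lr=0.1))
mom_end = run(Momentum(lr=0.1, momentum=0.9))
```

On this function the gradient along x is tiny, so SGD with `lr=0.1` shrinks x by only 1% per step, while Momentum's velocity accumulates along x and ends much closer to the minimum after the same 100 steps.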

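- AdaGrad: adapts the learning rate individually for each parameter. Because it accumulates the squared gradients from the start of training, the effective learning rate is large at first and steadily decreases as updates proceed.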

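- RMSProp: uses an exponential moving average (EMA) of the squared gradients, so recent gradient information is weighted more heavily than gradients from early in training. This keeps the learning rate from shrinking toward zero the way it does in AdaGrad.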

- Adam: combines the advantages of Momentum and AdaGrad, and applies bias correction to its moving-average estimates.
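AdaGrad, RMSProp, and Adam differ mainly in how they scale each parameter's step. Below is a minimal sketch in the same style, assuming the same hypothetical test function and start point. The hyperparameter values are illustrative, and the defaults for `decay`, `beta1`, `beta2`, and `eps` follow common conventions rather than anything stated above.

```python
import math


# Hypothetical test function (an assumption, not from the source):
# f(x, y) = x**2 / 20 + y**2, minimum at (0, 0).
def grad(params):
    x, y = params
    return [x / 10.0, 2.0 * y]


class AdaGrad:
    """h is a running sum of squared gradients, so lr / sqrt(h) can only shrink."""

    def __init__(self, lr=1.5, eps=1e-7):
        self.lr, self.eps, self.h = lr, eps, None

    def update(self, params, grads):
        if self.h is None:
            self.h = [0.0] * len(params)
        for i, g in enumerate(grads):
            self.h[i] += g * g
            params[i] -= self.lr * g / (math.sqrt(self.h[i]) + self.eps)


class RMSProp:
    """h is an exponential moving average of squared gradients; old gradients fade out."""

    def __init__(self, lr=0.01, decay=0.99, eps=1e-7):
        self.lr, self.decay, self.eps, self.h = lr, decay, eps, None

    def update(self, params, grads):
        if self.h is None:
            self.h = [0.0] * len(params)
        for i, g in enumerate(grads):
            self.h[i] = self.decay * self.h[i] + (1 - self.decay) * g * g
            params[i] -= self.lr * g / (math.sqrt(self.h[i]) + self.eps)


class Adam:
    """Momentum-style first moment m plus an adaptive second moment v, both bias-corrected."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = self.v = None
        self.t = 0  # step counter used by the bias correction

    def update(self, params, grads):
        if self.m is None:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        for i, g in enumerate(grads):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            # Bias correction: m and v start at 0, so early estimates are scaled up.
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            params[i] -= self.lr * m_hat / (math.sqrt(v_hat) + self.eps)


def run(optimizer, start=(-7.0, 2.0), steps=100):
    """Apply `steps` updates from `start` and return the final parameters."""
    params = list(start)
    for _ in range(steps):
        optimizer.update(params, grad(params))
    return params


ada_end = run(AdaGrad(lr=1.5))
rms_end = run(RMSProp(lr=0.1))
adam_end = run(Adam(lr=0.3))
```

The only difference between the `AdaGrad` and `RMSProp` classes is the `h` update: AdaGrad's running sum can only grow, so its step size keeps shrinking, whereas RMSProp's moving average lets old gradients decay. Adam's bias correction matters mostly in the first few steps, while `m` and `v` are still close to their zero initialization.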

References and visualization sources

https://github.com/Jaewan-Yun/optimizer-visualization

https://emiliendupont.github.io/2018/01/24/optimization-visualization/